There are many definitions of Big Data, but the one that is still most widely used was coined by Gartner:

Big data is high Volume, high Velocity, and/or high Variety information assets that require new forms of processing to enable enhanced decision making, insight discovery and process optimization (Gartner, The importance of Big Data: A definition, June 2012)

This 3V definition is actually not new: it was first coined by Doug Laney in February 2001, when he wrote about 3D data management (see Deja VVVu: Others claiming Gartner’s Construct for Big Data). In most definitions a fourth V (for veracity) is added, and Gartner has recently released a report that goes one step further and talks about the 12 dimensions of Big Data, or Extreme Information Management (EIM). Let’s delve a little deeper into these 4 Vs.

Volume – a constantly moving target

The size of the data that needs to be processed seems to be a constantly moving target. Big data, which was initially characterized as a few dozen TB in a single dataset, has now evolved to several PB, and the volume keeps increasing. The current idea is that data is characterized as big when its size breaks the barriers of traditional relational database management systems and when the volume prohibits processing it in a cost-effective and fast enough manner.

There are a number of factors driving this tremendous growth. We currently live in an age where most information is “born digital”: it is created, by a person or a machine, specifically for digital use. Key examples are email and text messaging, GPS location data, metadata associated with phone calls (so-called CDRs or Call Detail Records), data associated with most commercial transactions (credit card swipes, bar code reads), data associated with portal access (key cards or ID badges), toll-road access and traffic cameras, but also, increasingly, data from cars, televisions and appliances, the so-called “Internet of Things”. IDC estimated that 2.8 zettabytes (ZB) of data existed in the world in 2012, where one ZB = 1 billion TB. 90% of it was created in the past 2 years (IDC Digital Universe study, 2012).
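To put the figures quoted above into more familiar units, here is a minimal sketch of the arithmetic. It assumes decimal (SI) prefixes, and the function and variable names are purely illustrative.

```python
# Decimal (SI) prefixes are assumed: 1 TB = 10**12 bytes, 1 ZB = 10**21 bytes.
BYTES_PER_TB = 10**12
BYTES_PER_ZB = 10**21


def zb_to_tb(zettabytes):
    """Convert zettabytes to terabytes (1 ZB = 1 billion TB)."""
    return zettabytes * BYTES_PER_ZB / BYTES_PER_TB


# Figures quoted in the text for 2012.
total_zb = 2.8        # estimated size of the digital universe
recent_share = 0.90   # share created in the previous two years

recent_zb = total_zb * recent_share
older_zb = total_zb - recent_zb

print(f"Total: {zb_to_tb(total_zb):,.0f} TB")
print(f"Created in the previous two years: about {recent_zb:.2f} ZB")
print(f"Everything older than that: about {older_zb:.2f} ZB")
```

In other words, if the estimate holds, everything produced before the previous two years would fit in roughly a tenth of the total.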

In the near future, the amount of data will only increase, with the majority of this increase being driven by machine-generated data from sensors, RFID chips, NFC communications and other appliances. According to Cisco CTO Padmasree Warrior, we currently have 13 billion devices connected to the internet; this will increase to 50 billion in 2020 (see some predictions about the Internet of Things and wearable tech from Pew Research for more details).

Velocity

Data capture has become nearly instantaneous in this digital age thanks to new customer interaction points and technologies such as web sites, social media and smartphone apps, but we are also still capturing data from traditional sources such as ATMs, point-of-sale devices and other transactional systems. These kinds of rapid updates present new challenges to information systems. If you need to react to information in real time, traditional data processing technology simply will not suffice. Data is in most cases only valuable when it is processed in real time and acted upon. Custom-tailored experiences like Amazon’s recommendation engine or personalized promotions are the new norm.

Variety of data

Much of the data is “unstructured”, meaning that it doesn’t fit neatly into the columns and rows of a standard relational database. The current estimate is that 85% of all data is unstructured. Most social data is unstructured (such as book reviews on Amazon, blog comments, videos on YouTube, podcasts or tweets), but clickstream data and sensor data from cars, ships, RFID tags and smart energy meters are also prime examples of unstructured data.

Connected devices that track your every heartbeat and know whether you are sleeping or running hold the promise of ushering in an era of personalized medicine. The debate about whether the “Quantified Self” is the holy grail of personalized medicine or just hype is still ongoing.

Veracity

Veracity is all about the accuracy or “truth” of the information being collected. Since you will be unlocking and integrating data from external sources that you don’t control, you will need to verify it. Data quality and data integrity are more important than ever. I will delve a little deeper into this topic in a future blog post.

As outlined in Big data opportunities in vertical industries (Gartner, 2013), the challenges and the opportunities differ by industry. In the end, however, it is always about the value of the data.

Value of data

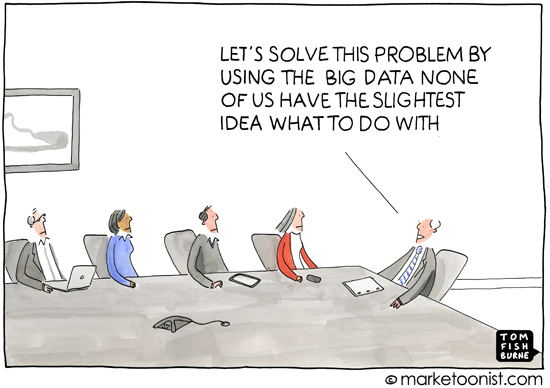

Approaching Big Data as a data management challenge is very one-sided. It’s not really important to know how many PB or ZB of data your business has accumulated; the issue is how to get value out of the data. The key here is analytics. Analytics is what makes big data come alive. But the nature of big data will require us to change the way we analyze this data. Traditional reporting and historical analytics will not suffice and are often not suited for big data. You will need to look at predictive analytics, text analysis, data mining, machine learning and similar techniques.

One of the most popular aspects of Big Data today is the realm of predictive analytics. This embraces a wide variety of techniques from statistics, modeling, machine learning and data mining. These tools can be used to analyze historical and current data and make projections about future or otherwise unknown events. This means exploiting patterns within the data to identify anomalies or unusual areas. These anomalies can represent risks (e.g. fraud, propensity to churn) or business opportunities such as cross-sell and up-sell targets, credit scoring optimization or insurance underwriting.
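As a small illustration of what identifying anomalies can mean in practice, here is a minimal sketch that flags unusual values with a modified z-score based on the median and the median absolute deviation (MAD). This is just one simple statistical technique among many, chosen here for illustration; the function name, the sample amounts and the threshold of 3.5 are all assumptions.

```python
from statistics import median


def find_anomalies(values, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds the threshold.

    The modified z-score uses the median and the median absolute deviation
    (MAD), which are less distorted by outliers than the mean and the
    standard deviation. The threshold of 3.5 is a common rule of thumb.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        # At least half of the values are identical: no usable spread.
        return []
    return [
        (i, v)
        for i, v in enumerate(values)
        if abs(0.6745 * (v - center) / mad) > threshold
    ]


# Hypothetical card transaction amounts for a single customer.
amounts = [20, 22, 19, 21, 23, 20, 18, 22, 21, 250]
print(find_anomalies(amounts))  # [(9, 250)]
```

A real fraud or churn model would combine many more signals than a single amount, but the principle is the same: learn what "normal" looks like from historical data and flag what deviates from it.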

Still, a lot of challenges remain. According to the results of Steria’s Business Intelligence Maturity Audit, performed with 600 different companies in 20 different countries, only 7% of European companies consider Big Data to be relevant. On the other hand, McKinsey predicts an estimated 600 billion USD revenue shift by 2020 to companies that use Big Data effectively (Source: McKinsey, 2013, Game changers: Five opportunities for US growth and renewal). In general, companies seem to struggle: 56% of companies say getting value out of big data is a challenge and 33% say they are challenged to integrate data across multiple sources.

